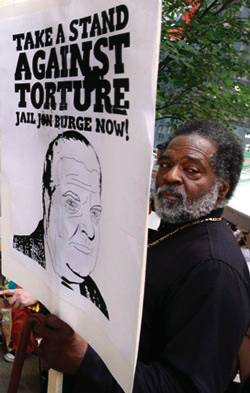

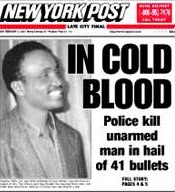

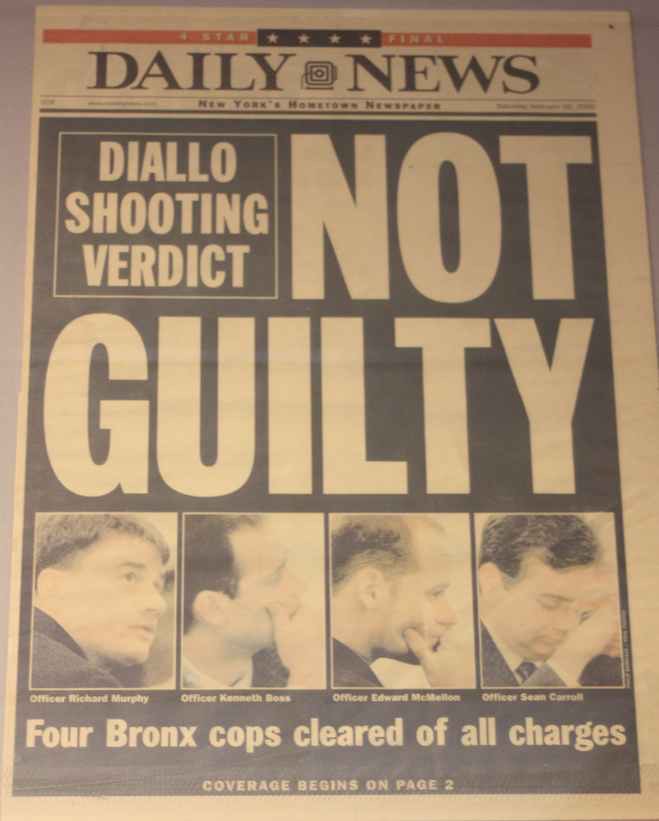

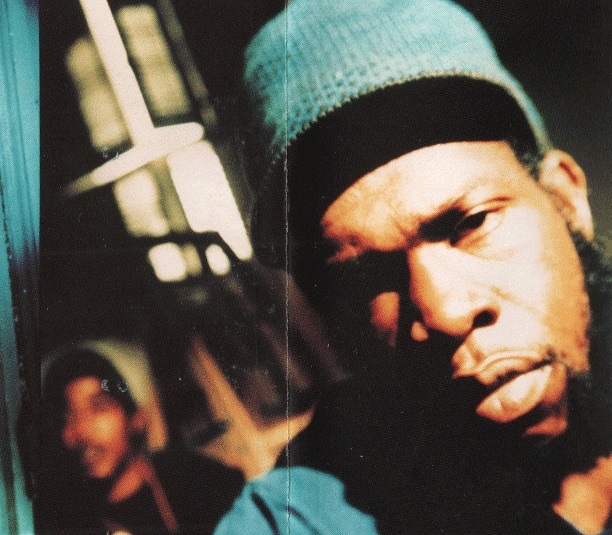

NYPD Using Poor Facial Recognition Technology for "Which-Hunts" (which NGHR did it?): Black Man Locked Up for 3 Days Based on Misidentification, was 8 Inches Taller and 70 lbs Heavier than Suspect

ACCORDING TO FUNKTIONARY:

Which-hunt – a code-word in racist white supremacist police force parlance meaning “let’s hunt down which nigger did it.”

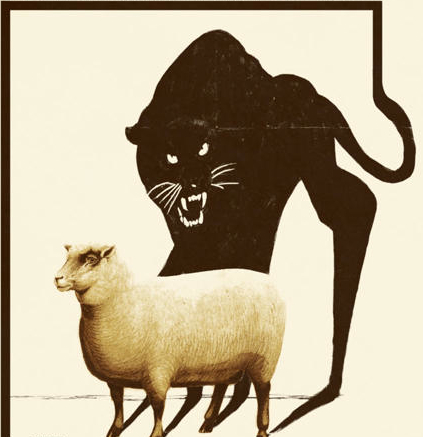

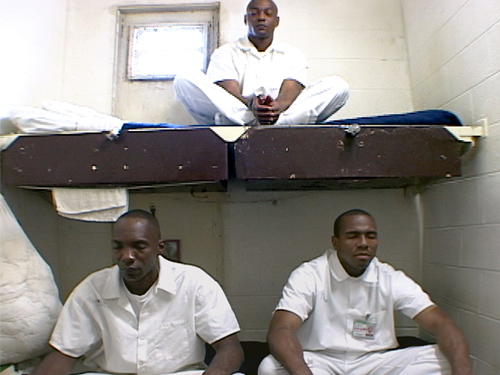

Which-Nigger – any native Black American who is routinely racially and spatially profiled for arrest as a likely target-suspect. A “Which Nigger” is never guilty by association but guilty simply by being a Black man in the wrong place at the wrong time near any alleged or actual crime. He is left to wait for the oncoming train of the “justice” railroad and, unable to afford legal representation, must rely on a public defender who will ensure he is afforded some extra time in prison.

From [HERE] Civil rights and privacy groups are demanding an investigation into the NYPD's alleged misuse of facial recognition technology after a false match led to a man being wrongfully arrested for a crime he did not commit.

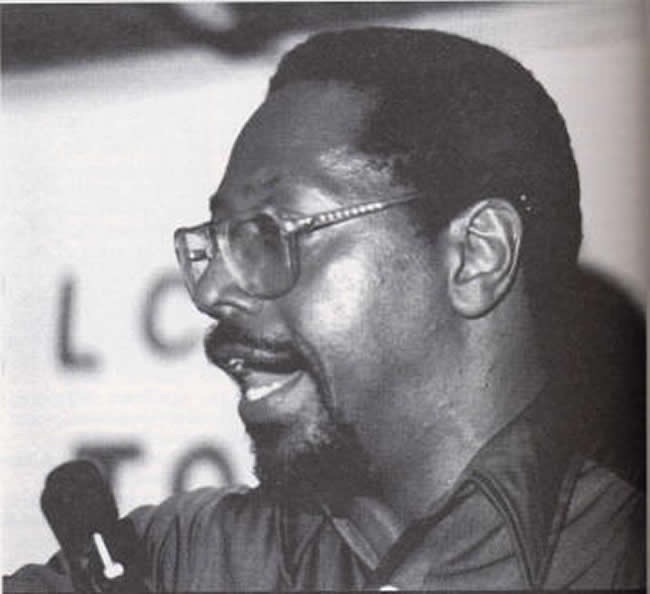

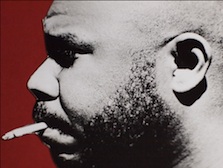

"I was so angry ... I was stressed out," Trevis Williams told Eyewitness News. "The man they were looking for, he was eight inches shorter than me and 70 pounds lighter."

Williams was falsely arrested and jailed for two days despite not matching the physical description given by the victim of a sex crime. The only similarities were that they were both Black men with locks, he says.

Location data from his cell phone showed that Williams was miles away from the crime, the New York Times first reported.

"Williams was driving from Connecticut to Brooklyn at the time another man was photographed flashing a woman in Manhattan's Union Square," the Surveillance Technology Oversight Project (STOP) said in a statement.

But Williams told Eyewitness News that, two months later, officers falsely arrested him anyway, relying on the NYPD's facial recognition technology.

Prosecutors dismissed the case last month after Williams' public defenders, the Legal Aid Society, were able to prove he was falsely identified.

"This could have very easily been solved by just really traditional police work," said Diane Akerman, Staff Attorney with the Digital Forensics Unit at the Legal Aid Society (Legal Aid).

In a letter sent to authorities including Inspector General Jeanene Barrett, Legal Aid detailed an alarming pattern of false arrests based on facial recognition data. The letter claims that the NYPD relies on facial matches sourced from outside its approved photo database and turns to other city agencies, such as the FDNY, to do what the NYPD itself is barred from doing.

The NYPD's use of facial recognition technology is subject to legal regulations.

The NYPD also says that facial recognition technology has proven successful, adding in a statement that "even if there is a possible match, the NYPD cannot and will never make an arrest solely using facial recognition technology."

However, Legal Aid claims that the Special Activities Unit (SAU) within the NYPD's Intelligence Division is secretly working outside the bounds of these regulations and purposefully avoiding documentation of its illicit activities.

Legal Aid also accuses the FDNY of running facial recognition searches that fall outside the legal limits of NYPD policy, citing a June court case that found that the NYPD used the FDNY to skirt regulations by "identifying a suspect in a misdemeanor protest case using Clearview AI and DMV photos."

In that case, the court found that law enforcement officials had misused facial identification and AI tools to illegally extract and alter surveillance images, and as a result pinned a misdemeanor charge on a protester.

"We work every single day to keep New Yorkers safe -- and that means using every tool at our disposal. Our fire marshals -- in their capacity as law enforcement agents -- work closely with our public safety partners, like the NYPD, to investigate crimes. This small group of elite law enforcement agents use facial recognition software as one of the many tools available, to conduct critical fire investigations," the FDNY responded in a statement.

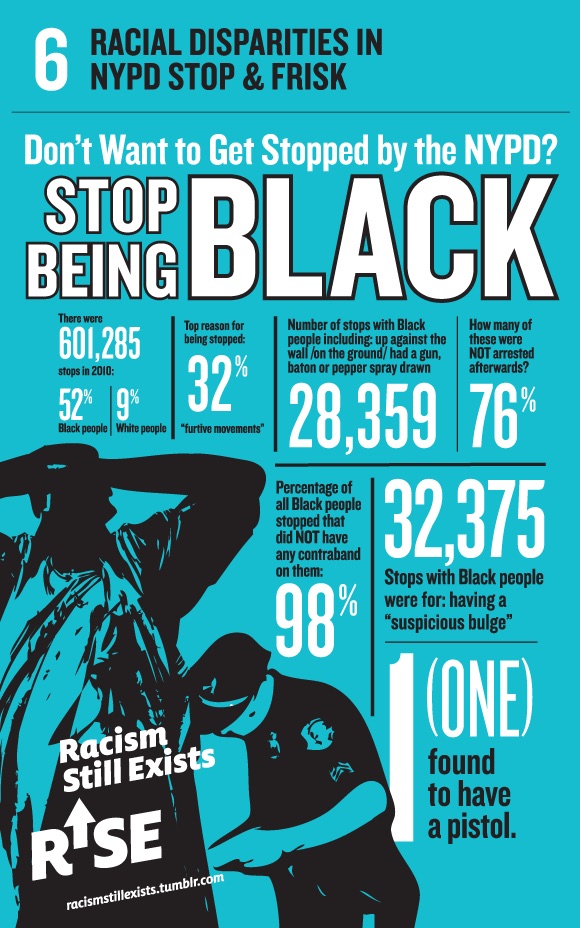

Civil rights advocates are also calling attention to racial and other biases embedded in facial recognition algorithms.

Facial recognition software "misidentifies individuals in poor quality photos and disproportionately misidentifies people of color, women, the young, and the elderly," STOP said in its 2021 report documenting the NYPD's use of facial recognition software.

"Because of the bias in who gets arrested in New York, it's going to disproportionately target Black and Latino and Asian individuals," said Albert Fox Cahn of STOP.

"Everyone, including the NYPD, knows that facial recognition technology is unreliable," said Diane Akerman, Staff Attorney with the Digital Forensics Unit at Legal Aid. "Yet the NYPD disregards even its own protocols, which are meant to protect New Yorkers from the very real risk of false arrest and imprisonment. It's clear they cannot be trusted with this technology, and elected officials must act now to ban its use by law enforcement."

As for Williams, he still faces the unearned consequences of his arrest.

"I was in the process of becoming a correctional officer at Rikers Island," he told Eyewitness News.

But after his arrest, he says "they kind of froze the hiring process."

"I hope people don't have to sit in jail or prison for things that they didn't do," Williams said.